I am quite eclectic in my quest for relevant information. Some of my immediate, easy-to-use findings appear in my series of LinkedIn posts. More sophisticated, lasting, seminal sources go into the MustHave, a compilation of sources on the issues an Augmented Manager needs to understand better (available here to anyone supporting the research effort of the Boostzone Institute).

This week I came across a very intriguing 2014 article that I wanted to share, not only with the happy few who subscribe to the MustHave but with everyone, because I found it particularly rich. This academic research (don't even try to understand half of it unless you are really advanced in AI, in particular in neural network dynamics, and in mathematics) shows that a relatively simple modification to pictures analyzed by AI systems can throw off the machine's perception to an incredible degree, while you and I, looking at the modified picture and at the original one, would not see any difference.

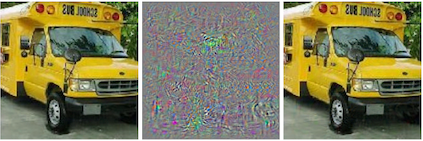

In the picture above, the AI correctly identified the image on the left. The image on the right, which is the left one modified by the noise shown in the middle, led the AI to answer "ostrich".
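For readers who want a feel for the mechanism without the mathematics of the paper, here is a minimal Python sketch. It is not the procedure used in the article: it assumes a hypothetical linear classifier (`weights`, with the labels "original class" and "ostrich") applied to a toy 28 x 28 grey image, and nudges every pixel by the same tiny amount `eps` in the direction that lowers the classifier's score (a gradient-sign step, the idea later popularized as the "fast gradient sign method"). All names and numbers are illustrative assumptions.

```python
import math

N_PIXELS = 28 * 28  # toy "image" size (an assumption, not from the article)

# Hypothetical linear classifier: a positive score means "original class",
# anything else means "ostrich".
weights = [math.sin(i) for i in range(N_PIXELS)]


def score(image):
    """Linear score: dot product of the weights and the pixel values."""
    return sum(w * x for w, x in zip(weights, image))


def label(image):
    return "original class" if score(image) > 0 else "ostrich"


def sign(v):
    """Return +1, -1 or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def gradient_sign_attack(image, margin=1.01):
    """Move every pixel by the same small eps against the sign of its weight.

    For a linear score the gradient with respect to the image is simply
    `weights`, so this step lowers the score by eps * sum(|w|) as long as
    no pixel has to be clipped. eps is chosen just large enough to push
    the score below zero. Assumes the image currently scores above zero.
    """
    total_abs_weight = sum(abs(w) for w in weights)
    eps = margin * score(image) / total_abs_weight
    perturbed = [
        min(1.0, max(0.0, x - eps * sign(w)))  # keep pixels in [0, 1]
        for x, w in zip(image, weights)
    ]
    return perturbed, eps


# Toy image: mid-grey pixels, only faintly correlated with the weights.
image = [0.5 + 0.02 * w for w in weights]

adversarial, eps = gradient_sign_attack(image)
max_change = max(abs(a - b) for a, b in zip(adversarial, image))
print(label(image))        # original class
print(label(adversarial))  # ostrich
print(f"largest per-pixel change: {max_change:.3f} on a 0-1 scale")
```

In this toy model the largest change to any pixel is `eps`, a couple of percent of the pixel range, yet the score drops by `eps` times the sum of the absolute weights, which grows with the number of pixels. Many invisible nudges all pushing in the same direction are what flip the answer.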

The paper does not try to draw many conclusions beyond how to train neural networks better, how to manage databases better, and so on. But for us as citizens and leaders, it confirms not only that AI is fallible (the best informed already know this well) but also that AI can be fooled, and relatively easily.

This can obviously have positive consequences, for instance for the demonstrators in Hong Kong who create image noise with their T-shirts to confuse the authorities' face recognition systems. It may have plenty of other consequences, positive and negative; I leave you to imagine which ones. For me, it mainly confirms the view that, however useful an AI can be, it is only a tool that helps humans, not an expert to trust. As a viewer, I don't mind that the machine fails to recognize these very slightly modified pictures, since I can still recognize them. But I care a lot if such mistakes lead to misinterpretations that could affect our lives because someone trusts the machine more than it can legitimately be trusted.

Have a good read and train your own neurons…